System environment

Ubuntu 16.04

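Install Docker

```bash
# On Ubuntu the package named "docker" is an unrelated system-tray tool;
# Docker Engine is packaged as docker.io
apt-get update
apt-get install docker.io
```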

Pull the image

```bash
docker pull ubuntu:16.04
```

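Run a container

```bash
docker run -ti ubuntu:16.04
```

Keep this terminal open: the following installation steps run inside this container, which is committed as an image later.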

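Install the JDK

```bash
# Run inside the container; the tarball has to be copied in first,
# e.g. from the host: docker cp jdk-8u11-linux-x64.tar.gz {container-id}:/root/
tar -zxvf jdk-8u11-linux-x64.tar.gz -C /opt
```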

Configure the Java environment variables
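The post does not list the variables themselves. Below is a minimal sketch, assuming the JDK was unpacked to /opt/jdk1.8.0_11 (the path used later in hadoop-env.sh) and Hadoop to /opt/hadoop-2.9.2. The HADOOP_HOME and PATH entries are my own additions so that jps and start-all.sh can be run without full paths, and the function name write_hadoop_env is hypothetical.

```bash
# Hypothetical helper: append Java/Hadoop environment variables to a shell
# profile exactly once. Assumed install paths: /opt/jdk1.8.0_11 and
# /opt/hadoop-2.9.2 (adjust if your versions differ).
write_hadoop_env() {
  local profile="$1"
  # Skip if the block was already written, so re-running is harmless
  if grep -q '^export JAVA_HOME=/opt/jdk1.8.0_11$' "$profile" 2>/dev/null; then
    return 0
  fi
  # Quoted heredoc: $PATH etc. are written literally and expanded at login
  cat >> "$profile" <<'EOF'
export JAVA_HOME=/opt/jdk1.8.0_11
export HADOOP_HOME=/opt/hadoop-2.9.2
export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
EOF
}

# Usage inside the container:
#   write_hadoop_env ~/.bashrc && . ~/.bashrc && java -version
```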

Install Hadoop

```bash
tar -zxvf hadoop-2.9.2.tar.gz -C /opt
```

In /opt/hadoop-2.9.2/etc/hadoop, edit the following configuration files:

core-site.xml

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/hadoop-2.9.2/tmp</value>
  </property>
</configuration>
```
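Everything later depends on fs.defaultFS naming a host (master) that every container can resolve. Once Hadoop is on PATH, `hdfs getconf -confKey fs.defaultFS` reports the effective value; before that, a rough sketch like the one below can read a property straight from the XML. It is a hypothetical helper that assumes each `<value>` follows its `<name>`, as in the file above; it is not a real XML parser.

```bash
# Hypothetical helper: print the <value> of a named property in a Hadoop
# *-site.xml file. Assumes the simple layout used in this post, where each
# <value> follows its <name>; comments and CDATA are not handled.
get_hadoop_prop() {
  local file="$1" name="$2"
  awk -v n="<name>${name}</name>" '
    index($0, n) { found = 1 }            # matched the property name
    found && /<value>/ {                  # first <value> at or after it
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
  ' "$file"
}

# Usage: split fs.defaultFS into host and port
#   uri=$(get_hadoop_prop /opt/hadoop-2.9.2/etc/hadoop/core-site.xml fs.defaultFS)
#   hostport=${uri#hdfs://}
#   echo "host=${hostport%%:*} port=${hostport##*:}"
```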

hdfs-site.xml

```xml
<configuration>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>master:50090</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/opt/hadoop-2.9.2/tmp/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/opt/hadoop-2.9.2/tmp/dfs/data</value>
  </property>
</configuration>
```
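With dfs.replication set to 2, HDFS needs at least two live DataNodes, otherwise new blocks stay under-replicated. The post does not show which hosts run DataNodes; in Hadoop 2.x that list lives in etc/hadoop/slaves (one host per line), which the start scripts read. Assuming it lists slave1 and slave2, a hypothetical sanity check could compare the two numbers:

```bash
# Hypothetical check: compare dfs.replication with the DataNode hosts
# listed in a Hadoop 2.x slaves file (one host per line).
count_datanodes() {
  # Count lines that are neither blank nor comments
  grep -cEv '^[[:space:]]*(#|$)' "$1"
}

check_replication() {
  local replication="$1" slaves_file="$2" nodes
  nodes=$(count_datanodes "$slaves_file")
  if [ "$replication" -gt "$nodes" ]; then
    echo "WARN: dfs.replication=$replication but only $nodes DataNode(s) listed" >&2
    return 1
  fi
  echo "OK: dfs.replication=$replication, $nodes DataNode(s) listed"
}

# Usage, assuming etc/hadoop/slaves contains slave1 and slave2:
#   check_replication 2 /opt/hadoop-2.9.2/etc/hadoop/slaves
```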

yarn-site.xml

```xml
<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>master</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>20480</value>
  </property>
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>2048</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>2.1</value>
  </property>
</configuration>
```
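The three memory settings interact: each NodeManager advertises 20480 MB, every container gets at least 2048 MB, and by default a container may use up to 2.1 times its physical allocation in virtual memory before the NodeManager kills it. A small sketch of that arithmetic (the function name is hypothetical):

```bash
# Hypothetical helper: derive per-node limits from three yarn-site.xml values
yarn_capacity() {
  # $1 = yarn.nodemanager.resource.memory-mb
  # $2 = yarn.scheduler.minimum-allocation-mb
  # $3 = yarn.nodemanager.vmem-pmem-ratio
  local nm_mb="$1" min_mb="$2" ratio="$3"
  # Integer division: upper bound on concurrent minimum-size containers
  echo "max containers per NodeManager: $(( $nm_mb / $min_mb ))"
  # Shell arithmetic is integer-only, so use awk for the fractional ratio
  awk -v m="$min_mb" -v r="$ratio" \
    'BEGIN { printf "virtual memory limit per %d MB container: %.1f MB\n", m, m * r }'
}

yarn_capacity 20480 2048 2.1
# max containers per NodeManager: 10
# virtual memory limit per 2048 MB container: 4300.8 MB
```

YARN does not check 20480 MB against the machine's real RAM. If all containers share one host, every NodeManager still claims 20 GB, so these values may need lowering on a smaller machine.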

mapred-site.xml

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>master:10021</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>master:19889</value>
  </property>
</configuration>
```
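Editing four XML files by hand is error-prone. Here is a minimal sketch of a hypothetical generator that writes a complete configuration file from name=value pairs. It does no XML escaping, which is fine for the values in this post but not for values containing <, > or &. Hadoop 2.x distributions typically ship only mapred-site.xml.template in etc/hadoop, so writing mapred-site.xml this way also replaces the usual copy-from-template step.

```bash
# Hypothetical generator: write a Hadoop *-site.xml from name=value pairs.
# Values are written verbatim (no XML escaping of <, > or &).
write_hadoop_conf() {
  local out="$1" pair
  shift
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    for pair in "$@"; do
      # Split on the first "=" only, so values may themselves contain "="
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
        "${pair%%=*}" "${pair#*=}"
    done
    echo '</configuration>'
  } > "$out"
}

# Usage, reproducing mapred-site.xml from this post:
#   write_hadoop_conf /opt/hadoop-2.9.2/etc/hadoop/mapred-site.xml \
#     mapreduce.framework.name=yarn \
#     mapreduce.jobhistory.address=master:10021 \
#     mapreduce.jobhistory.webapp.address=master:19889
```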

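Install the SSH server and set up passwordless login

```bash
apt-get install openssh-server
service ssh start
service ssh status
# Press Enter at every prompt: passwordless login needs an empty passphrase
ssh-keygen -t rsa
cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
```

Add the public keys of all three nodes to the authorized_keys file on every node.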
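One way to do this, assuming each node's id_rsa.pub has been copied somewhere every node can read (for example with docker cp; the file names below are hypothetical), is to merge the keys into authorized_keys without duplicates and tighten the permissions, since sshd by default ignores an authorized_keys file that others can write. If all three images are committed from the same container after ssh-keygen, as in this post, they share one key pair and the merge changes nothing.

```bash
# Hypothetical helper: merge public keys into an authorized_keys file.
# Existing entries are kept, blank lines dropped, exact duplicates removed.
merge_authorized_keys() {
  local dest="$1" tmp
  shift
  tmp=$(mktemp)
  # A missing dest on the first run is fine: cat's error is discarded
  cat "$dest" "$@" 2>/dev/null | grep -v '^[[:space:]]*$' | sort -u > "$tmp"
  mv "$tmp" "$dest"
  chmod 600 "$dest"   # sshd (StrictModes) rejects group/world-writable files
}

# Usage on each node (key file names are hypothetical):
#   merge_authorized_keys ~/.ssh/authorized_keys master.pub slave1.pub slave2.pub
```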

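Commit the finished container

```bash
# {container-id} is the ID of the container started above (see docker ps)
docker commit {container-id} hadoop/master
docker commit {container-id} hadoop/slave1
docker commit {container-id} hadoop/slave2
```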

Start the containers

```bash
# Run each command in its own terminal; -h sets the container's hostname
docker run -ti -h master hadoop/master
docker run -ti -h slave1 hadoop/slave1
docker run -ti -h slave2 hadoop/slave2
```

Configure hosts

Get each node's IP address with ifconfig, for example:

```
master:10.0.0.5
slave1:10.0.0.6
slave2:10.0.0.7
```

Run vim /etc/hosts and add the following entries to the hosts file on every node:

```
10.0.0.5 master
10.0.0.6 slave1
10.0.0.7 slave2
```
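Because the IPs are only known once the containers are running, the entries have to be written on every node. A hypothetical helper that takes the same name:ip pairs as above and appends only names that are still missing; it deliberately leaves existing lines alone, so a stale IP must be fixed by hand. Docker manages /etc/hosts inside a container, so these edits may be lost when the container restarts.

```bash
# Hypothetical helper: append "ip name" lines for "name:ip" pairs whose
# name has no entry yet. Existing lines (even stale ones) are left alone.
add_hosts_entries() {
  local hosts_file="$1" pair name ip
  shift
  for pair in "$@"; do
    name="${pair%%:*}"
    ip="${pair#*:}"
    # awk exits 0 if the name already appears in a host-name column
    if awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts_file" 2>/dev/null; then
      continue
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  done
}

# Usage on every node (IPs from ifconfig, as above):
#   add_hosts_entries /etc/hosts master:10.0.0.5 slave1:10.0.0.6 slave2:10.0.0.7
```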

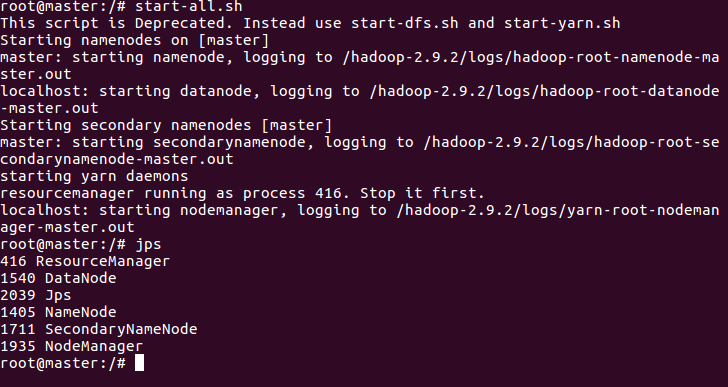

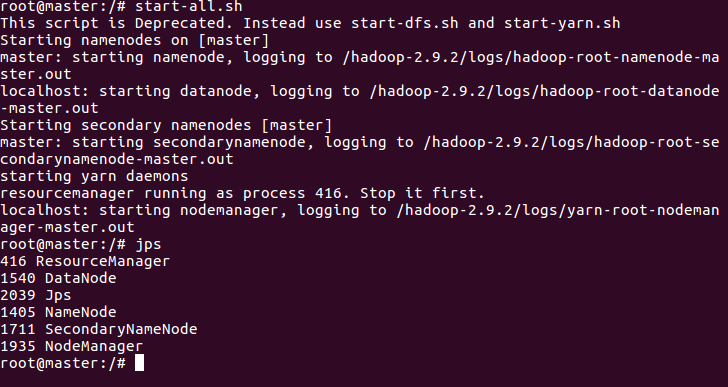

Start Hadoop

Run start-all.sh on each node to start Hadoop.
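After start-up, jps on each node should list the Hadoop daemons. Under this configuration one would expect NameNode, SecondaryNameNode (dfs.namenode.secondary.http-address is master:50090) and ResourceManager on master, and DataNode and NodeManager on the slaves, assuming the slaves file lists slave1 and slave2. Two prerequisites the post does not show: HDFS has to be formatted once on master with `hdfs namenode -format` before the first start, and sshd must be running in every container (`service ssh start`), because docker run starts only the shell, not the SSH service. A hypothetical checker for the jps output:

```bash
# Hypothetical checker: read jps output on stdin and report expected
# daemons that are not running. Returns non-zero if any are missing.
check_daemons() {
  local running missing d
  # jps prints "<pid> <ClassName>"; keep only the class names
  running=$(awk '{ print $2 }')
  missing=0
  for d in "$@"; do
    if ! printf '%s\n' "$running" | grep -qx "$d"; then
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"
}

# Usage (expected daemons under the assumptions above):
#   on master:          jps | check_daemons NameNode SecondaryNameNode ResourceManager
#   on slave1, slave2:  jps | check_daemons DataNode NodeManager
```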

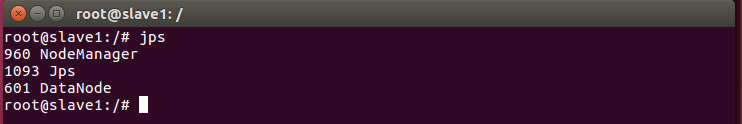

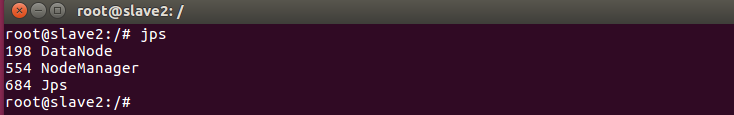

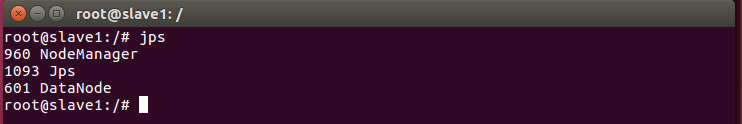

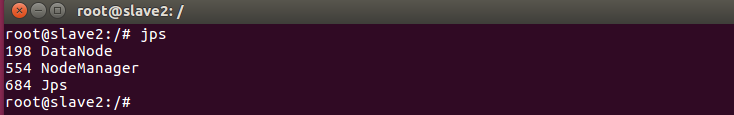

Results

master

slave1

slave2

Problems encountered

Image pull fails

docker pull ubuntu:16.04 failed.

Fix: configure a registry mirror hosted in China by writing the following to /etc/docker/daemon.json:

```json
{
  "registry-mirrors": ["https://docker.mirrors.ustc.edu.cn"]
}
```
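A malformed daemon.json prevents the Docker daemon from starting, so it is worth validating the file before restarting Docker. A minimal sketch (the function name is hypothetical) that refuses to overwrite an existing non-empty file and, if python3 is installed, checks the JSON with the standard json.tool module. Public mirrors come and go, so it is also worth confirming the mirror URL is still reachable.

```bash
# Hypothetical helper: write a registry-mirror daemon.json and validate it
write_registry_mirror() {
  local file="$1" mirror="$2"
  # Never clobber an existing config: other settings may live there
  if [ -s "$file" ]; then
    echo "refusing to overwrite non-empty $file; merge by hand" >&2
    return 1
  fi
  mkdir -p "$(dirname "$file")"
  printf '{\n  "registry-mirrors": ["%s"]\n}\n' "$mirror" > "$file"
  # Validate with Python's json.tool when python3 is available
  if command -v python3 >/dev/null 2>&1; then
    python3 -m json.tool "$file" >/dev/null || return 1
  fi
}

# Usage (as root):
#   write_registry_mirror /etc/docker/daemon.json https://docker.mirrors.ustc.edu.cn
```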

Restart Docker:

```bash
systemctl daemon-reload
systemctl restart docker
```

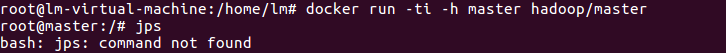

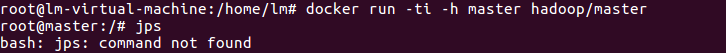

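jps: command not found

Cause: the Java path was not set up correctly (jps ships in $JAVA_HOME/bin, so the shell finds it only when that directory is on PATH). Fix: in /opt/hadoop-2.9.2/etc/hadoop, edit hadoop-env.sh:

```bash
export JAVA_HOME=/opt/jdk1.8.0_11
```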
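In Hadoop 2.x the stock hadoop-env.sh typically contains `export JAVA_HOME=${JAVA_HOME}`, which is empty when the daemons are launched over SSH in a shell that has not read your profile; hard-coding the path avoids that. A sketch of a hypothetical helper that makes the edit with GNU sed (as shipped with Ubuntu) instead of an editor:

```bash
# Hypothetical helper: hard-code JAVA_HOME in hadoop-env.sh.
# Replaces an existing "export JAVA_HOME=..." line, or appends one.
set_hadoop_java_home() {
  local env_file="$1" java_home="$2"
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    # "|" as the sed delimiter because the path contains "/"; -i is GNU sed
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
  else
    printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
  fi
}

# Usage:
#   set_hadoop_java_home /opt/hadoop-2.9.2/etc/hadoop/hadoop-env.sh /opt/jdk1.8.0_11
```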

The container has no ifconfig command

Fix:

```bash
apt-get update
apt install net-tools
```
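Alternatively, the IP can be read without installing anything: on Docker's default bridge network, Docker usually writes the container's own IP and its hostname (the -h value) into /etc/hosts. A hypothetical helper that looks the name up there; `hostname -i` usually gives the same answer.

```bash
# Hypothetical helper: print the IP mapped to a host name in a hosts file.
# Defaults: /etc/hosts and this container's own hostname.
container_ip() {
  local hosts_file="${1:-/etc/hosts}"
  local name="${2:-$(hostname)}"
  # Skip comment lines; match the name against every host-name column
  awk -v n="$name" '$1 !~ /^#/ {
    for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit }
  }' "$hosts_file"
}

# Usage inside a container started with -h master:
#   container_ip
```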

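Save the Hadoop image as a copy

Save the container with Hadoop installed as a separate image:

```bash
docker commit -m "hadoop install" {container-id} ubuntu:hadoop
```

Start a container from it with docker run:

```bash
docker run -ti ubuntu:hadoop
```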

Start the master, slave1 and slave2 containers

```bash
docker run -ti -h master ubuntu:hadoop
docker run -ti -h slave1 ubuntu:hadoop
docker run -ti -h slave2 ubuntu:hadoop
```
